- #ACROSYNC RESTORE FROM BACKUP SOFTWARE#

- #ACROSYNC RESTORE FROM BACKUP CODE#

- #ACROSYNC RESTORE FROM BACKUP LICENSE#

#ACROSYNC RESTORE FROM BACKUP CODE#

I haven't read the code (read: speculation ahead!), but at least the "what's already there" part seems rather easy to me if the backups are performed in a chunk-based, deduplicated way (cf. also borg backup and restic):

First, you perform a GET BUCKET, which gives you a list of all files in the bucket. If you name your chunk files after their hashes, that's all the info you need about which chunks you still have to upload. You can then proceed to chunk your local files and upload the missing parts. The only question remaining would be the amount of data (i.e. filenames) you'll have to download per amount of data in the backups, which you can vary by adjusting the chunking size.

Edit: Of course, because S3's PUT OBJECT is idempotent in this case (i.e. ignoring hash collisions, as their probability should be orders of magnitude lower than a doomsday scenario), you could just transfer each chunk every time. Realistically, all this would do is hog your bandwidth and ruin your performance.
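The chunk-based upload speculated about in this comment can be sketched roughly as follows. This is only an illustration under stated assumptions, not Duplicacy's actual code: a plain dict stands in for the bucket (listing its keys plays the role of GET BUCKET, assigning a key plays the role of PUT OBJECT), chunks are fixed-size, and SHA-256 is the chunk hash. The names `split_chunks`, `chunk_name`, and `upload_missing` are hypothetical.

```python
import hashlib
from typing import Dict, Iterator, List


def split_chunks(data: bytes, size: int) -> Iterator[bytes]:
    """Yield fixed-size chunks (assumption: real tools may chunk differently)."""
    for start in range(0, len(data), size):
        yield data[start:start + size]


def chunk_name(chunk: bytes) -> str:
    """Name a chunk after its hash, so the name alone identifies the content."""
    return hashlib.sha256(chunk).hexdigest()


def upload_missing(data: bytes, bucket: Dict[str, bytes],
                   size: int = 4 * 1024 * 1024) -> List[str]:
    """Store only chunks whose hash-derived name is not in the bucket yet.

    `bucket` is a hypothetical stand-in for remote object storage.
    Returns the names of the chunks that were actually written.
    """
    existing = set(bucket)  # stand-in for listing the bucket once
    uploaded = []
    for chunk in split_chunks(data, size):
        name = chunk_name(chunk)
        if name not in existing:
            bucket[name] = chunk  # stand-in for an idempotent PUT
            existing.add(name)
            uploaded.append(name)
    return uploaded
```

A smaller `size` finds more duplicate chunks but makes the listing longer, which is the trade-off the comment points out. Skipping the `existing` check would still produce the same bucket contents, since writing the same bytes under the same name twice changes nothing; it would only cost bandwidth.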

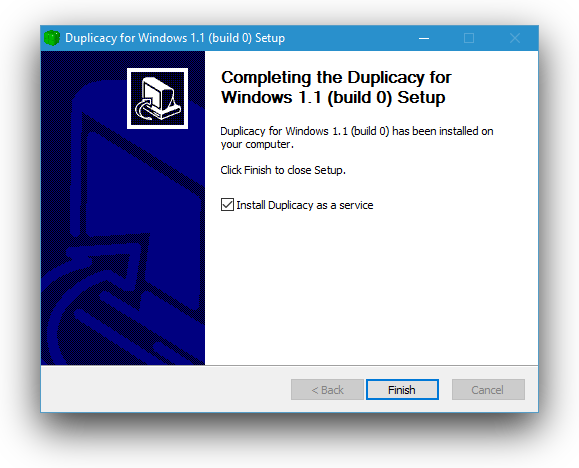

It just so happens they're 2 letters different. Here are a few names I just devised: ClouDuplicate, Clouder, DupliCloud, CfC (cloud file cloud). Instead, it's very uncool to try to pollute an existing namespace of the same thing. We're talking pro-level bad will here.

Duplicacy doesn't use the filesystem events APIs. Instead, it checks timestamps and sizes to identify modified files and, by default, backs up only those files. The main use case supported by Duplicacy but not by any of the others, including Duplicati and Arq, is backing up multiple clients to the same storage while still taking advantage of cross-client deduplication. This is because Duplicacy saves each chunk as an individual file using its hash as the file name (as opposed to using a chunk database to maintain the mapping between chunks and actual files), so no locking is required with multiple clients. Another implication is that the lock-free implementation is actually simpler without the chunk database and thus less error-prone. One of our users wrote a long post ( ) comparing Duplicacy with other tools including Arq, based on his experience. I also added a comment to that thread comparing Duplicacy with Arq based on my read of their documentation.
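The timestamp-and-size check mentioned in this comment can be illustrated with a small sketch. It is an assumption about the general technique, not Duplicacy's implementation: a snapshot is taken to be a mapping from relative path to `(size, mtime_ns)`, and the names `take_snapshot` and `files_to_back_up` are hypothetical.

```python
import os
from typing import Dict, List, Tuple

# Hypothetical snapshot format: relative path -> (size in bytes, mtime in ns)
Snapshot = Dict[str, Tuple[int, int]]


def take_snapshot(root: str) -> Snapshot:
    """Record the size and modification time of every file under root."""
    snapshot: Snapshot = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            info = os.stat(path)
            snapshot[os.path.relpath(path, root)] = (info.st_size, info.st_mtime_ns)
    return snapshot


def files_to_back_up(previous: Snapshot, current: Snapshot) -> List[str]:
    """Return paths that are new, or whose size or timestamp changed."""
    return sorted(
        path for path, meta in current.items() if previous.get(path) != meta
    )
```

No file contents are read to decide what changed, which is why this kind of check is cheap. The cost is that an edit which preserves both size and timestamp would go unnoticed.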

#ACROSYNC RESTORE FROM BACKUP LICENSE#

GitHub has commercial repos and private repos. If you want the free options on GH, you choose from a list of standard open source licenses. You're also asked to create a LICENSE file to go along with this. Their license, however, is very much NON-FREE. As in, if I click clone, since I work for an employer of 50k people, I'm in violation. And we're not even talking about developing on it, or submitting PRs, or what have you. This is a simple copy which puts me in violation. It's very much against the spirit of GitHub, and probably against the license on GH as well.

And it is also attempting to dilute another project that does something similar.

(Response, since I'm submitting 'too fast'.)

#ACROSYNC RESTORE FROM BACKUP SOFTWARE#

Reported to GitHub as commercial software masquerading as various open, free-license projects (MIT, GPL, BSD, etc.).

Also, intentional namespace pollution with an existing backup tool, which IS GPL'ed.
